Question #3a497

Entropy is a measure of the amount of disorder (or randomness) in a system. The basic principle is this: high disorder equals high entropy, while order equals low entropy.

An example of this is water in its three phases of matter: solid, liquid, and gas. Solid water (ice) has a lower entropy than liquid water, which in turn has a lower entropy than water vapor.

So, an example of a system with low entropy is ice. In ice, the molecules are locked into fixed positions in a crystal lattice and are not free to move around - the system has very little disorder.

On the other hand, when water goes from liquid to vapor, the molecules are no longer held together and can now move around freely, almost independently of one another. The disorder of the system has increased, which means that this is now a system with high entropy.

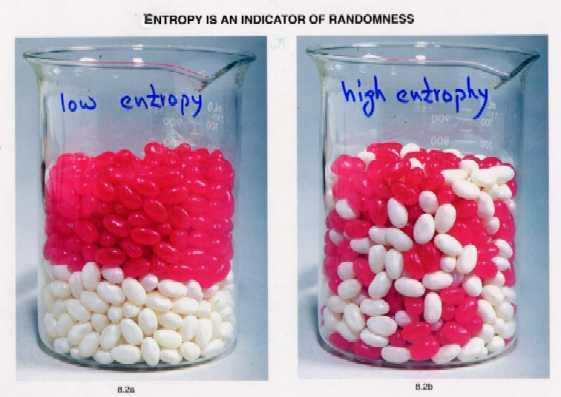
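To put rough numbers on the ice, liquid, vapor ordering, here is a minimal sketch that estimates how much the molar entropy of water increases at each phase change, using the relation ΔS = ΔH / T for a transition at constant temperature. The enthalpy values are approximate textbook figures at 1 atm, not numbers taken from this answer, and the function and constant names are made up for illustration.

```python
def transition_entropy(delta_h_j_per_mol: float, temperature_k: float) -> float:
    """Entropy change (J/(mol*K)) for a phase change at constant temperature:
    delta_S = delta_H / T."""
    return delta_h_j_per_mol / temperature_k


# Approximate textbook values for water at 1 atm (assumed, not from the answer).
DH_FUSION = 6010.0         # J/mol, melting ice at 273.15 K
DH_VAPORIZATION = 40650.0  # J/mol, boiling water at 373.15 K

ds_fusion = transition_entropy(DH_FUSION, 273.15)
ds_vaporization = transition_entropy(DH_VAPORIZATION, 373.15)

print(f"ice -> liquid:   +{ds_fusion:.1f} J/(mol*K)")
print(f"liquid -> vapor: +{ds_vaporization:.1f} J/(mol*K)")
```

Both changes are positive, and the jump from liquid to vapor is several times larger than the jump from ice to liquid, which fits the picture of molecules in a gas moving almost independently.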

Likewise, entropy is an indicator of randomness. Think, for example, of two jars, each holding two types of beans. In one jar the beans are stacked in layers, while in the other the beans are mixed together.

The jar in which you have less randomness will have less entropy, while the jar in which you have more randomness will have more entropy.
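One way to make the bean jars concrete is to count arrangements. The sketch below assumes a simplified jar: a single column of 10 red and 10 white beans, where beans of the same type are interchangeable. It uses Boltzmann's relation S = k ln W, where W is the number of arrangements consistent with what you see, and reports S in units of k. The jar sizes and function names are illustrative assumptions.

```python
import math


def count_arrangements(n_red: int, n_white: int) -> int:
    """Number of distinct orderings of the beans in one column,
    treating beans of the same type as indistinguishable."""
    return math.comb(n_red + n_white, n_red)


def entropy_in_units_of_k(num_arrangements: int) -> float:
    """Boltzmann's S = k * ln(W), returned as S / k."""
    return math.log(num_arrangements)


# Layered jar: "all red below all white" matches exactly one ordering.
layered_w = 1
# Mixed jar: any ordering of the 20 beans counts as "mixed together".
mixed_w = count_arrangements(10, 10)

print("layered:", layered_w, "arrangement, S/k =", entropy_in_units_of_k(layered_w))
print("mixed:  ", mixed_w, "arrangements, S/k =", round(entropy_in_units_of_k(mixed_w), 2))
```

The layered jar corresponds to a single arrangement, so its entropy in this toy model is zero, while the mixed jar can be realized in 184756 ways and so has a higher entropy.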

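One last example. Think of the soldiers who take part in a military parade and of the crowd that attends a concert. The soldiers march in neat, regular rows, so that system has low entropy; the people in the crowd are scattered about and move around at random, so that system has high entropy.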

Once again, less disorder and less randomness equal less entropy.

More disorder and more randomness equal more entropy.