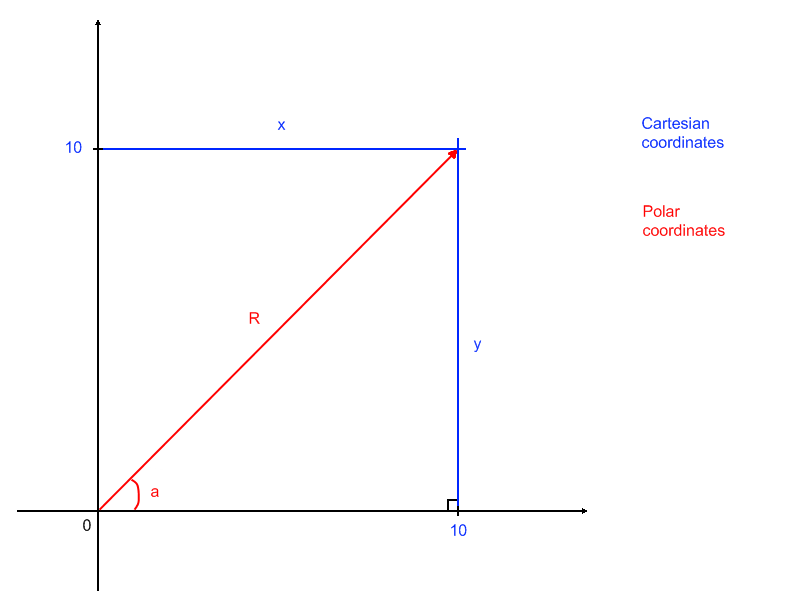

How do you convert the Cartesian coordinates (10,10) to polar coordinates?

1 Answer

Aug 6, 2015

Cartesian: (x, y) = (10, 10)

Polar: (r, θ) = (10√2, π/4) ≈ (14.14, 45°)

Explanation:

The problem is represented by the graph below:

In a 2D space, a point is located by two coordinates:

The Cartesian coordinates (x, y) are the horizontal and vertical positions.

The polar coordinates (r, θ) are the distance from the origin and the angle of inclination from the horizontal axis.

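The three vectors x, y and r form a right triangle, so the Pythagorean theorem and basic trigonometry relate the two systems: r = √(x² + y²) and, for a point in the first quadrant, θ = arctan(y/x).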
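As a rough illustration, the conversion can be sketched in Python. The function name `cartesian_to_polar` is hypothetical and not part of the original answer. `math.hypot` gives the distance from the origin, and `math.atan2` gives the angle from the positive x-axis in radians, which also works outside the first quadrant.

```python
import math


def cartesian_to_polar(x, y):
    """Return (r, theta) for the point (x, y), with theta in radians."""
    r = math.hypot(x, y)      # distance from the origin: sqrt(x**2 + y**2)
    theta = math.atan2(y, x)  # angle measured from the positive x-axis
    return r, theta


r, theta = cartesian_to_polar(10, 10)
# r is about 14.14 and theta is about 45 degrees (pi/4 radians)
print(round(r, 2), round(math.degrees(theta), 2))
```

Using `atan2(y, x)` rather than `atan(y / x)` avoids a division by zero when x = 0 and picks the correct quadrant from the signs of x and y.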

In your case, that is:
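Substituting x = 10 and y = 10 into the standard conversion formulas, and assuming the angle is measured counterclockwise from the positive x-axis, the computation would read:

$$
\begin{aligned}
r &= \sqrt{x^2 + y^2} = \sqrt{10^2 + 10^2} = \sqrt{200} = 10\sqrt{2} \approx 14.14 \\
\theta &= \arctan\!\left(\frac{y}{x}\right) = \arctan\!\left(\frac{10}{10}\right) = \arctan(1) = \frac{\pi}{4} = 45^\circ
\end{aligned}
$$

So the polar coordinates are $(r, \theta) = \left(10\sqrt{2}, \frac{\pi}{4}\right)$.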