What could go wrong with Newton's method?

Why are these conditions true? Newton's method works very well if f' is not too small, f'' is not too big, and our initial guess is near the solution.
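A standard Taylor-series argument shows where these three conditions come from. Assuming f is twice differentiable near a root r and f'(x_n) ≠ 0, expand f about the current iterate x_n:

$$0 = f(r) = f(x_n) + f'(x_n)(r - x_n) + \tfrac{1}{2} f''(\xi_n)(r - x_n)^2$$

for some ξ_n between x_n and r. Dividing by f'(x_n) and using the Newton update x_{n+1} = x_n − f(x_n)/f'(x_n), the errors e_n = x_n − r satisfy

$$e_{n+1} = \frac{f''(\xi_n)}{2 f'(x_n)}\, e_n^2 .$$

If M bounds |f''/(2f')| on an interval around r that contains the iterates, then |e_{n+1}| ≤ (M|e_n|)|e_n|, so the error shrinks whenever M|e_n| < 1. A small M (f' not too small, f'' not too big) together with a small |e_0| (a guess near the solution) is what makes this hold from the first step on.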

Suppose we examine the graph of a function that changes very little as x changes near the point where it crosses the x-axis (more slowly than a linear function). If we start with an initial guess x1 for where the graph intersects y = 0, the tangent line drawn to the function at x1 intercepts the x-axis at an x-coordinate much farther away than the exact value.
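To see this overshoot numerically, here is a small Python sketch. The function arctan(x) is an illustrative choice, not one from the question: its only root is x = 0, but it flattens out away from 0, so f' is small at a distant guess. The helper names `newton_step` and `newton_iterates` are hypothetical.

```python
import math

def newton_step(f, df, x):
    """One Newton step: the x-intercept of the tangent line to f at x."""
    return x - f(x) / df(x)

def newton_iterates(f, df, x0, n):
    """Return [x0, x1, ..., xn] produced by n Newton steps."""
    xs = [x0]
    for _ in range(n):
        xs.append(newton_step(f, df, xs[-1]))
    return xs

# Illustrative function (an assumption): f(x) = arctan(x), root at x = 0.
def f(x):
    return math.atan(x)

def df(x):
    # Derivative of arctan; it is small when |x| is large (flat graph).
    return 1.0 / (1.0 + x * x)

print(newton_iterates(f, df, 2.0, 3))  # far start: tangent lines overshoot
print(newton_iterates(f, df, 0.5, 3))  # near start: iterates approach 0
```

From x1 = 2 the first tangent line already lands near x ≈ −3.54, farther from the root than the starting guess, and the following iterates grow rapidly in size. From x1 = 0.5 the iterates close in on 0 within a few steps.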

Can we use linear approximations instead of Newton's method to solve equations of the form f(x) = 0 when the function is not defined for x-values near the solution? For example, f(x) = √(x − √5).
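For the example f(x) = √(x − √5), the Newton step can be worked out by hand. Since f'(x) = 1/(2√(x − √5)), the update gives x2 = x1 − 2(x1 − √5) = 2√5 − x1, the mirror image of x1 across the root √5. So every start x1 > √5 lands at x2 < √5, where f is undefined. The Python sketch below checks this for the illustrative starting value x1 = 3 (the names `A`, `f`, `df` are assumptions for the sketch).

```python
import math

A = math.sqrt(5.0)  # the root of f(x) = sqrt(x - sqrt(5)) is x = sqrt(5)

def f(x):
    # Only defined for x >= A; math.sqrt raises ValueError for negative input.
    return math.sqrt(x - A)

def df(x):
    # f'(x) = 1 / (2 sqrt(x - A)); undefined at x = A itself.
    return 1.0 / (2.0 * math.sqrt(x - A))

x1 = 3.0
x2 = x1 - f(x1) / df(x1)  # algebraically equal to 2*A - x1
print(x2, x2 >= A)        # x2 falls below A, outside the domain of f

try:
    f(x2)
except ValueError:
    print("The next Newton step leaves the domain of f")
```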

Suppose the graph of a function is concave down, becomes sharply curved, and then becomes concave up. If the root lies in the region where the graph is sharply curved, any tangent line drawn to approximate the root will be the function itself and will therefore pass through the root.
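A standard textbook example of trouble around a change in concavity (chosen here for illustration; it is not a function from the question) is f(x) = x³ − 2x + 2. Its second derivative 6x changes sign at x = 0, and its only real root is near x ≈ −1.769. Starting Newton's method at x = 0, the tangent lines never head toward that root; the iterates cycle between 0 and 1:

```python
def f(x):
    return x**3 - 2*x + 2

def df(x):
    return 3*x**2 - 2

x = 0.0          # start at the inflection point of f
iterates = [x]
for _ in range(6):
    x = x - f(x) / df(x)  # Newton update: x-intercept of the tangent line
    iterates.append(x)
print(iterates)  # alternates between 0.0 and 1.0
```

A different starting point, for example x = −2, does converge to the real root, which again shows how much the outcome depends on the initial guess.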

1 Answer

See below.

Explanation:

Newton's method converges in some circumstances, but not always. An iterative process needs additional conditions to be convergent. Those conditions can be sufficient or, at best, necessary and sufficient.
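Because convergence is not guaranteed, a practical implementation usually guards the iteration with a stopping test and an iteration limit. The following is a minimal Python sketch; the function name `newton` and the default values of `tol` and `max_iter` are illustrative assumptions, not a prescribed method.

```python
import math

def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton iteration x_{k+1} = x_k - f(x_k)/f'(x_k).

    Returns (root, iterations) when successive iterates agree to a
    relative tolerance; raises RuntimeError otherwise, since the
    iteration is not guaranteed to converge.
    """
    x = x0
    for k in range(1, max_iter + 1):
        d = df(x)
        if d == 0:
            raise RuntimeError(f"f'(x) = 0 at x = {x}; tangent is horizontal")
        x_next = x - f(x) / d
        if abs(x_next - x) <= tol * max(1.0, abs(x_next)):
            return x_next, k
        x = x_next
    raise RuntimeError(f"no convergence after {max_iter} iterations")

# Converges: sqrt(2) as the positive root of x^2 - 2.
print(newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0))

# Fails: arctan(x) from a starting point too far from its root at 0.
try:
    newton(math.atan, lambda x: 1 / (1 + x * x), 2.0)
except RuntimeError as err:
    print(err)
```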

Sufficient conditions are easier to find, so we will explain how to obtain sufficient conditions for the Newton iterative process to converge.
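One common textbook route to such a sufficient condition, sketched here under the assumption that f is twice continuously differentiable and f' ≠ 0 on an interval I containing the root r, treats Newton's method as a fixed-point iteration:

$$\varphi(x) = x - \frac{f(x)}{f'(x)}, \qquad x_{k+1} = \varphi(x_k), \qquad \varphi(r) = r .$$

Differentiating,

$$\varphi'(x) = 1 - \frac{f'(x)^2 - f(x)\, f''(x)}{f'(x)^2} = \frac{f(x)\, f''(x)}{f'(x)^2} .$$

If φ maps I into itself and

$$\left| \frac{f(x)\, f''(x)}{f'(x)^2} \right| \le L < 1 \quad \text{for all } x \in I,$$

then by the mean value theorem |x_{k+1} − r| = |φ(x_k) − φ(r)| ≤ L |x_k − r|, so x_k → r for every x_0 ∈ I. The quantity |f f''/(f')²| is small exactly when f' is not too small, f'' is not too big, and f(x) is close to zero, that is, when x is near the solution.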

Given

We say that

If

then if

Conclusion: Analyzing the behaviour of

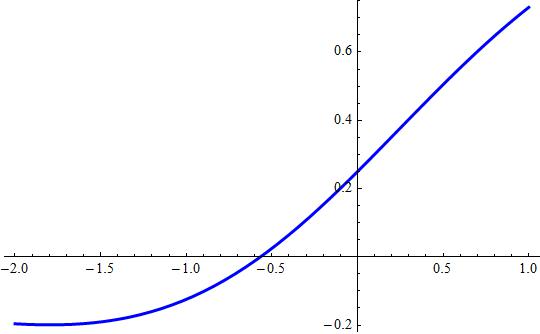

Ex. Determine the roots of

Here

As we know, the solution is

Attached the graphics for